Nvidia Benefits from DeepSeek in AI Training While Broadcom and Marvell Gain from AI Inference

The Market’s Misunderstanding of AI Infrastructure

The AI sector is experiencing rapid expansion, with Nvidia leading in AI compute, while Broadcom and Marvell are strengthening their positions in AI networking and inference. When DeepSeek launched last month, investors incorrectly assumed that Broadcom and Marvell would gain at Nvidia’s expense. This misunderstanding led to a temporary sell-off in Nvidia’s stock, while Broadcom and Marvell saw brief gains.

Nvidia’s latest earnings show that this assumption was incorrect. CEO Jensen Huang made it clear during the earnings call that DeepSeek’s emergence is increasing demand for Nvidia’s AI GPUs. More AI companies building large-scale models means greater demand for the hardware that trains and deploys them, reinforcing Nvidia’s leadership in AI training.

At the core of this misinterpretation is the distinction between AI training and AI inference, and the different roles that Nvidia, Broadcom, and Marvell play in AI infrastructure.

AI Training vs. AI Inference: Nvidia, Broadcom, and Marvell’s Roles

Nvidia dominates AI training by providing the GPU compute power necessary to develop and refine AI models. Its Blackwell and Hopper GPUs remain the industry standard for AI workloads, used by enterprises and hyperscalers to train large neural networks. AI training requires an immense amount of computational power, often running on clusters of thousands of GPUs for extended periods. Nvidia’s CUDA software ecosystem ensures that its GPUs remain indispensable for AI training, solidifying its market position.

Broadcom benefits from AI inference by supplying custom AI ASICs to hyperscalers such as Google, Microsoft, and Amazon. These companies develop homegrown inference chips to optimize AI workloads, leveraging Broadcom’s ASICs and networking solutions to improve performance at scale. Broadcom is also a key provider of high-speed networking silicon, including Ethernet and silicon photonics solutions that connect Nvidia’s GPU clusters inside data centers.

Marvell plays a crucial role in AI infrastructure by designing networking and storage chips that support AI workloads. Like Broadcom, Marvell provides solutions for AI inference and data transport but does not compete in AI compute.

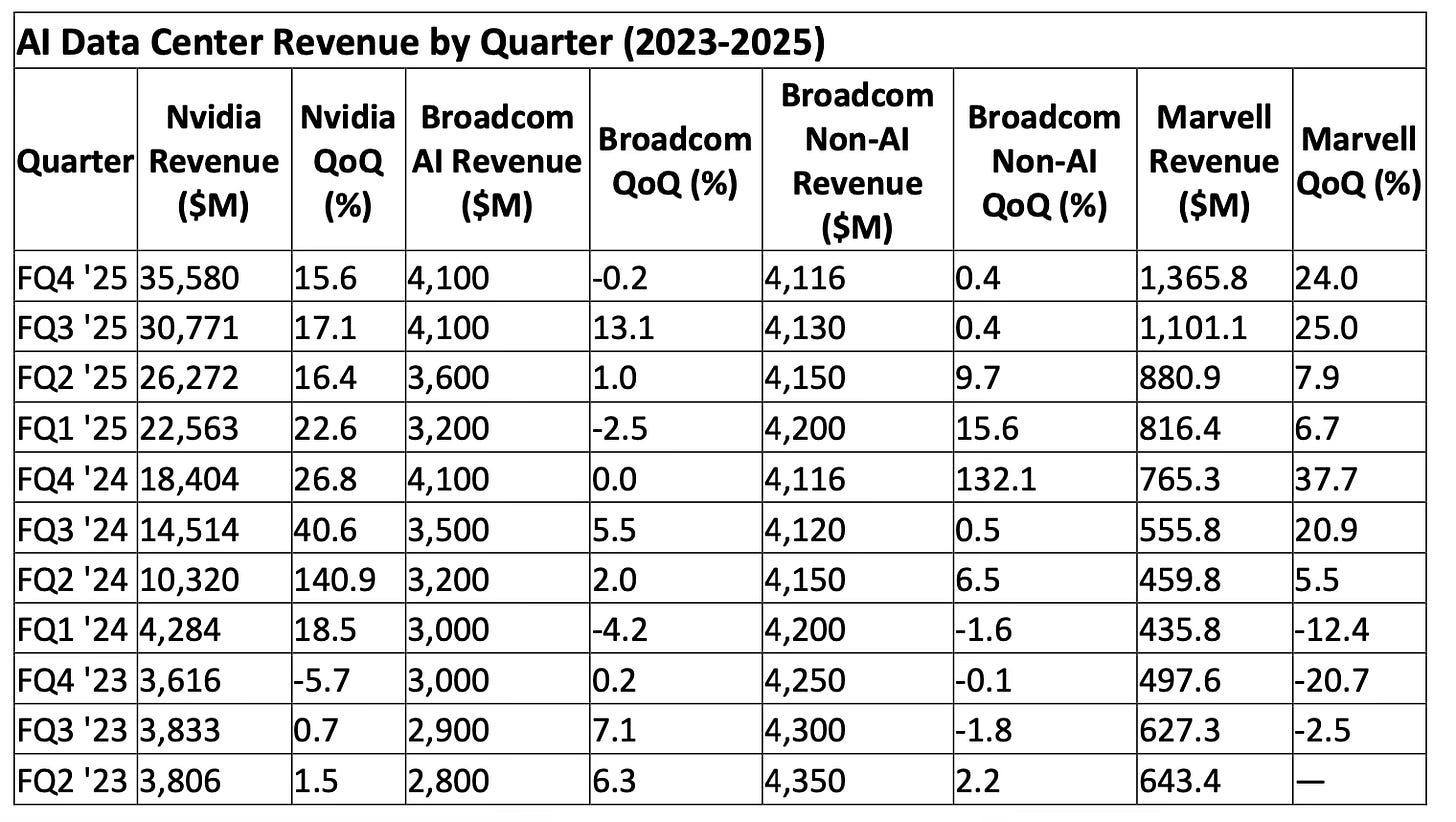

Rather than competing with Nvidia, Broadcom and Marvell supply the infrastructure necessary for Nvidia-powered AI workloads to operate at scale. The table below highlights AI revenue trends across these three companies, showing Nvidia’s dominance in training while Broadcom and Marvell benefit from inference and networking.