Nvidia Fighting Back Against Broadcom and Marvell With Its Own ASIC Inference Chips for Data Centers (Updated)

Nvidia has redefined the AI hardware market with its powerful GPUs, which dominate AI training tasks globally. However, the rapidly evolving demand for inference solutions in hyperscale data centers is prompting the company to challenge Broadcom and Marvell in the ASIC (application-specific integrated circuit) inference chip market. This strategic shift highlights Nvidia's focus on diversifying its offerings and capitalizing on the exponential growth of the AI inference market.

ASICs vs. GPUs: The Shift in AI Workloads

GPUs are the cornerstone of AI training, offering the massive parallel processing power needed to handle large datasets and complex computations. However, inference (the process of applying trained models to make predictions or classifications) has different requirements. Inference workloads prioritize low latency, high throughput, and energy efficiency, areas where ASICs excel.

Why ASICs Are Replacing GPUs for Inference

ASICs are custom-designed for specific workloads, achieving higher performance-per-watt than general-purpose GPUs. Their cost-effectiveness, scalability, and tailored design make them ideal for inference tasks at scale. This shift has driven a growing adoption of ASICs by hyperscalers aiming to optimize their data center infrastructure for emerging AI workloads.
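To make the performance-per-watt comparison concrete, here is a minimal Python sketch of how the metric and the resulting energy cost per million inferences could be computed. The `Accelerator` class, the `energy_cost_per_million` helper, and every number below are hypothetical placeholders chosen for illustration, not measurements of any real GPU or ASIC.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Accelerator:
    """A hypothetical inference accelerator (illustrative values only)."""
    name: str
    inferences_per_second: float  # sustained throughput
    power_watts: float            # average board power under load

    def perf_per_watt(self) -> float:
        """Inferences per second delivered for each watt consumed."""
        if self.power_watts <= 0:
            raise ValueError("power_watts must be positive")
        return self.inferences_per_second / self.power_watts


def energy_cost_per_million(acc: Accelerator, usd_per_kwh: float) -> float:
    """Electricity cost in USD to serve one million inferences."""
    if acc.inferences_per_second <= 0:
        raise ValueError("inferences_per_second must be positive")
    # Watts are joules per second, so this is joules per inference.
    joules_per_inference = acc.power_watts / acc.inferences_per_second
    # One kWh is 3.6 million joules.
    kwh_per_million = joules_per_inference * 1_000_000 / 3_600_000
    return kwh_per_million * usd_per_kwh


# Assumed figures, NOT vendor specifications.
gpu = Accelerator("hypothetical GPU", inferences_per_second=10_000, power_watts=700)
asic = Accelerator("hypothetical ASIC", inferences_per_second=8_000, power_watts=300)

for chip in (gpu, asic):
    cost = energy_cost_per_million(chip, usd_per_kwh=0.10)
    print(f"{chip.name}: {chip.perf_per_watt():.1f} inf/s per W, "
          f"${cost:.5f} per million inferences")
```

In this made-up scenario the ASIC delivers lower raw throughput yet wins on efficiency, which is the trade-off hyperscalers weigh when sizing inference fleets. Real comparisons would also need to account for hardware cost, utilization, and cooling.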

DeepSeek does not develop its own ASICs. Instead, it has emerged as a disruptive force in AI inference by optimizing AI models to run on Nvidia's H800 GPUs. Unlike Nvidia, Broadcom, or Marvell, DeepSeek pursues cost-efficient inference through software-based optimizations rather than specialized silicon. This offers an alternative path to reducing inference costs and may slow the near-term transition to ASICs for certain AI workloads.

AI Hardware Market Overview

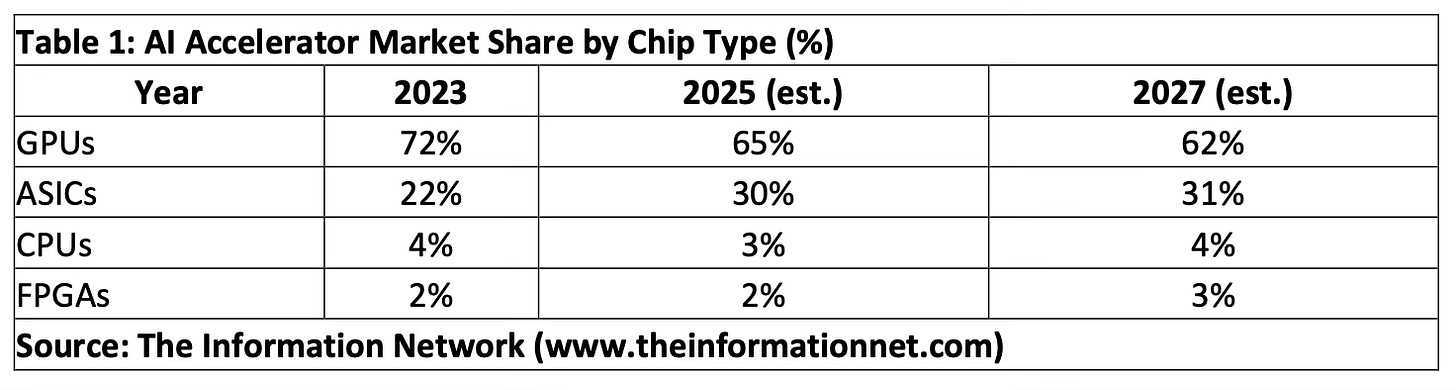

The AI hardware market is segmented into training and inference, with GPUs currently dominating training tasks. However, the inference segment is experiencing faster growth due to widespread AI adoption across industries.

DeepSeek’s software-driven gains in inference efficiency may slow the shift from GPUs to ASICs, so I have adjusted my ASIC projections slightly downward from my pre-DeepSeek analysis.

Inference vs. Training: Revenue Growth

The AI inference chip market is projected to grow rapidly, driven by applications in natural language processing, recommendation systems, and edge AI. This growth contrasts with the relatively mature training market, which remains dominated by GPUs.

The AI inference market is still expected to reach nearly $155 billion by 2030. However, DeepSeek’s efficiency optimizations could strengthen the position of GPUs in inference, allowing them to retain market share longer before ASIC adoption overtakes them.

GPU vs. ASIC Chips: Training vs. Inference Segmentation

GPUs continue to lead in training applications, where their flexibility and power are indispensable. However, ASICs are increasingly preferred for inference due to their tailored designs and efficiency, as shown in Table 3.