Nvidia Fighting Back Against Broadcom and Marvell With Its Own ASIC Inference Chips for Data Centers

Nvidia Doesn’t Compete Against Marvell or Broadcom - Yet

Nvidia has redefined the AI hardware market with its powerful GPUs, which dominate AI training tasks globally. However, a common misconception among investors is that Nvidia directly competes with companies like Broadcom and Marvell. In reality, Nvidia focuses on designing and selling GPUs primarily for AI training, while Broadcom and Marvell specialize in ASICs (application-specific integrated circuits) tailored for AI inference. This misconception became evident on December 9, 2024, when Marvell reported strong earnings, leading to a 13% surge in its stock price. Despite this positive development for the semiconductor sector, Nvidia's stock declined, as investors mistakenly perceived Marvell's success as a threat to Nvidia's market position.

Similarly, on December 13, 2024, the day after Broadcom reported strong earnings, its stock surged 24%. Nvidia's stock fell 2.3% on the same day, again because investors mistakenly read Broadcom's success as a threat to Nvidia's market position.

Nvidia’s GPUs for Training and Broadcom’s and Marvell’s ASICs for Inference

To clarify, AI training and inference serve different functions in the development and deployment of artificial intelligence. Training is the initial phase, in which an AI model learns to recognize patterns by analyzing vast amounts of data and repeatedly adjusting its parameters to reduce its errors. This process requires enormous computational power, which is where Nvidia's GPUs excel. Inference, on the other hand, is the application of a trained model to new, unseen data to make predictions or decisions. This phase demands efficient, real-time processing, the kind of workload that ASICs from companies like Broadcom and Marvell are optimized for.
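The difference in workload can be made concrete with a toy example. The sketch below is purely illustrative and assumes a one-feature linear model, made-up data, and plain gradient descent; it is not how any vendor's models are trained. Training sweeps the whole dataset thousands of times, while inference on a new input is a single forward pass.

```python
# Toy illustration (hypothetical data and hyperparameters): training vs. inference
# for the one-feature linear model y = w*x + b.

def train(xs, ys, lr=0.01, epochs=2000):
    """Fit w and b by full-batch gradient descent on mean squared error.

    Also returns a rough count of arithmetic steps, to show that training
    repeatedly sweeps the entire dataset.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    ops = 0
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            err = (w * x + b) - y      # forward pass on one sample
            grad_w += 2 * err * x / n  # backward pass: gradient of MSE w.r.t. w
            grad_b += 2 * err / n      # ... and w.r.t. b
            ops += 3                   # rough per-sample step count
        w -= lr * grad_w               # parameter update after each full sweep
        b -= lr * grad_b
    return w, b, ops

def infer(w, b, x):
    """Inference: one forward pass with the already-trained parameters."""
    return w * x + b

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # generated from y = 2x + 1
w, b, train_ops = train(xs, ys)
print(f"w={w:.3f}, b={b:.3f}, training steps ~ {train_ops}")
print(f"prediction for x=10: {infer(w, b, 10.0):.2f}")
```

Even in this tiny case, training takes tens of thousands of steps while each prediction takes one; at the scale of modern models, that asymmetry is why training and inference favor different hardware trade-offs.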

Understanding the distinct roles of AI training and inference, as well as the specialized hardware each requires, is crucial for investors. Recognizing that Nvidia, Broadcom, and Marvell operate in complementary segments of the AI hardware market can lead to more informed investment decisions and a clearer perspective on market dynamics.

Nvidia Doesn’t Compete Against DeepSeek Either

The impact of DeepSeek's emergence on Nvidia's stock further illustrates this misconception. On January 20, 2025, DeepSeek released its R1 AI model, which achieved performance comparable to leading Western models at a significantly lower cost. A week later, on January 27, 2025, the news triggered a substantial sell-off in Nvidia's shares, with the stock plummeting 17% and erasing nearly $600 billion in market value.
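As a rough consistency check, the two figures together imply Nvidia's market value just before the sell-off. This is derived arithmetic, assuming the 17% drop and the roughly $600 billion loss describe the same single-day move; it is not a separately reported number. Here $\Delta V$ is the value erased and $d$ the fractional drop:

```latex
$$V_{\text{before}} \approx \frac{\Delta V}{d} = \frac{\$600\ \text{billion}}{0.17} \approx \$3.5\ \text{trillion}$$
```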

Investors reacted out of concern that DeepSeek's cost-effective AI solutions could undermine Nvidia's position in the AI hardware market. However, this reaction overlooked the fact that DeepSeek's innovations pertain primarily to AI inference, a segment where Nvidia is less focused. Consequently, the perceived threat to Nvidia was based on a misunderstanding of the distinct roles each company plays within the AI ecosystem.

DeepSeek's efficient models help Chinese-made AI processors get more out of their hardware, narrowing the practical performance gap with their U.S. counterparts. While Nvidia continues to lead in AI training with its advanced GPUs, DeepSeek's innovations in inference offer alternative solutions, particularly in markets aiming to reduce reliance on foreign technology. Nvidia and DeepSeek therefore address different layers of the AI ecosystem: Nvidia builds the hardware used for training, while DeepSeek builds models designed for efficient inference, which minimizes direct competition between the two companies.

ASICs vs. GPUs: The Shift in AI Workloads

GPUs are the cornerstone of AI training, offering unparalleled parallel processing power to handle large datasets and complex computations. However, inference—the process of applying trained models to make predictions or classifications—has different requirements. Inference workloads prioritize low latency, high throughput, and energy efficiency, areas where ASICs excel.
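Latency and throughput pull against each other in inference serving, which is why hardware that is efficient on both matters. The sketch below assumes a deliberately simple, hypothetical cost model in which each batch of requests pays a fixed overhead plus a per-request cost; the constants are placeholders, not measurements of any real chip.

```python
# Hypothetical cost model (assumption, for illustration only): each batch pays a
# fixed overhead plus a per-request cost. Real accelerators are less linear.
OVERHEAD_MS = 5.0   # hypothetical fixed cost per batch (e.g. launch, data transfer)
PER_ITEM_MS = 0.5   # hypothetical marginal cost per request in the batch

def batch_latency_ms(batch_size: int) -> float:
    """Time until every request in the batch has its answer."""
    return OVERHEAD_MS + PER_ITEM_MS * batch_size

def throughput_per_s(batch_size: int) -> float:
    """Requests completed per second if batches run back to back."""
    return batch_size / batch_latency_ms(batch_size) * 1000.0

for bs in (1, 8, 64):
    print(f"batch={bs:3d}  latency={batch_latency_ms(bs):5.1f} ms  "
          f"throughput={throughput_per_s(bs):7.1f} req/s")
```

Larger batches amortize the fixed overhead and raise throughput, but every request waits longer. Under this model, the only way to improve both at once is to shrink the overhead and per-request costs themselves, which is the efficiency argument made for inference-specific silicon.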

Why ASICs Are Replacing GPUs for Inference

ASICs are custom-designed for specific workloads, so they typically achieve higher performance-per-watt than general-purpose GPUs on those workloads. Their cost-effectiveness at volume, scalability, and tailored design make them well suited to inference at scale. These advantages have driven growing adoption of ASICs by hyperscalers seeking to optimize their data center infrastructure for emerging AI workloads.
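Performance-per-watt translates directly into energy cost at data center scale. The sketch below uses placeholder figures, not vendor specifications: it assumes a hypothetical GPU and a hypothetical ASIC with equal throughput, where the ASIC draws half the power, and a hypothetical load of one billion requests per day.

```python
from dataclasses import dataclass

@dataclass
class Accelerator:
    name: str
    inferences_per_s: float  # sustained throughput (hypothetical)
    power_w: float           # power draw while serving (hypothetical)

    def perf_per_watt(self) -> float:
        """(inferences/s) / (joules/s) = inferences per joule."""
        return self.inferences_per_s / self.power_w

    def energy_kwh(self, n_inferences: float) -> float:
        """Energy needed to serve n inferences, in kilowatt-hours."""
        joules = n_inferences / self.perf_per_watt()
        return joules / 3.6e6  # 1 kWh = 3.6 million joules

# Placeholder figures chosen only to illustrate a 2x efficiency gap.
gpu = Accelerator("general-purpose GPU", inferences_per_s=2000, power_w=700)
asic = Accelerator("inference ASIC", inferences_per_s=2000, power_w=350)

daily_requests = 1e9  # hypothetical daily load
for chip in (gpu, asic):
    print(f"{chip.name}: {chip.perf_per_watt():.2f} inf/J, "
          f"{chip.energy_kwh(daily_requests):.1f} kWh/day")
```

Because energy scales inversely with performance-per-watt, doubling efficiency halves the energy bill for the same load; multiplied across a hyperscaler's fleet, that is the economic case for custom inference chips.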

AI Hardware Market Overview

The AI hardware market is segmented into training and inference, with GPUs currently dominating training tasks. However, the inference segment is experiencing faster growth due to widespread AI adoption across industries.

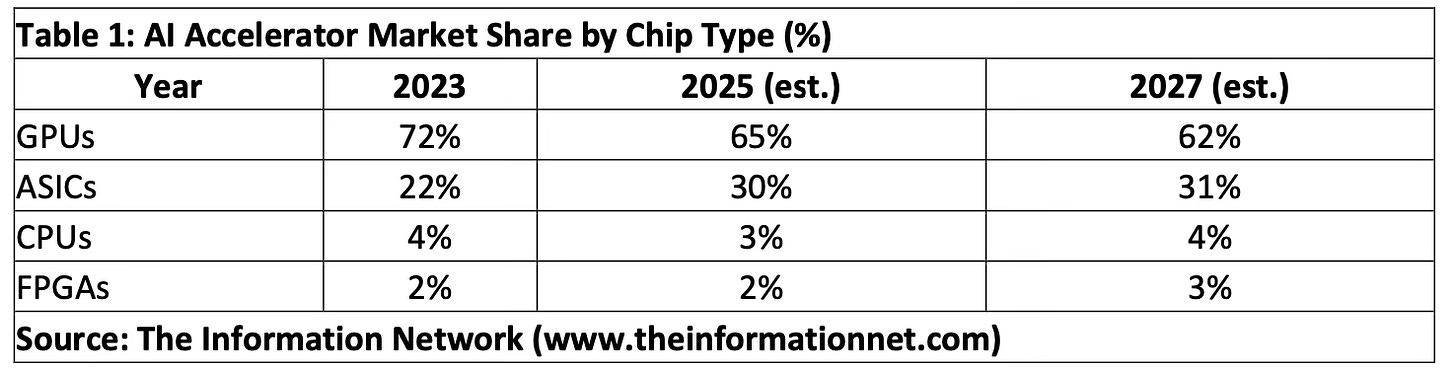

Table 1 underscores GPUs' dominance in AI hardware, especially for training, while ASICs' share is steadily increasing due to their advantages in inference tasks.